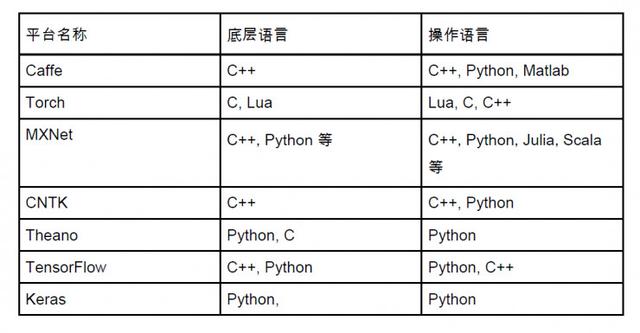

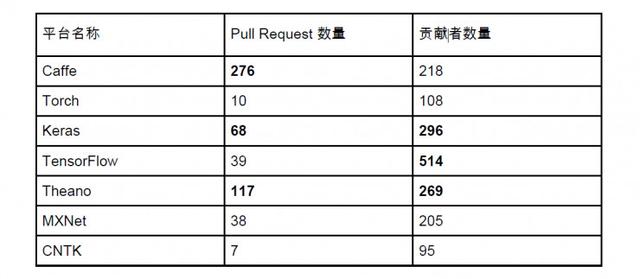

This article is based on a talk by Dr. Peng Hesen, a machine learning scientist at Microsoft's US headquarters, and was compiled by Dr. Peng himself. Like programming languages, deep learning open source frameworks each have their own strengths, weaknesses, and suitable scenarios. How should AI practitioners choose among them? In this session we invited Dr. Peng Hesen, a machine learning scientist who has worked at Google, Amazon, and Microsoft, to speak on "MXNet Is Hot: How Should AI Practitioners Choose a Deep Learning Open Source Framework?" Dr. Peng witnessed, and took an active part in, the adoption of deep learning at all three of these companies.

Guest introduction: Peng Hesen holds a Ph.D. from Emory University. He is currently a machine learning scientist at Microsoft's US headquarters and a senior researcher in Microsoft's Bing Ads department. His research interests are natural language processing and machine learning for advertising and recommendation systems. Dr. Peng was previously the youngest machine learning researcher at Amazon, and before that worked in Google's econometrics department and at the Chinese Academy of Sciences.

Deep learning is a very popular field, and there are now many very good platforms on the market. I believe everyone starting out on this journey is asking: how should I choose a platform? Let me first mention two points that general evaluations may not have considered. The first is graph computation and symbolic differentiation, a very interesting and important by-product of deep learning. The second is how deep the user can reach into the framework, that is, how much of its low-level machinery is exposed; this directly affects how a deep learning system can be developed and how much freedom the user has. Both points matter a great deal to beginners and practitioners alike, and I will cover them in detail later.
First of all, congratulations to MXNet on its recent endorsement by Amazon. MXNet is a very good platform with many excellent properties: it supports multi-node model training, for example, and it has the most comprehensive multi-language support of any platform I know. Some reviews also report that MXNet performs much better than its peers, a point we will return to in the discussion that follows.

Since we are on the topic: how do we choose a deep learning open source platform, and what should the reference standards look like?

First, the five reference standards for deep learning open source platforms

The platforms (or software packages) discussed today are Caffe, Torch, MXNet, CNTK, Theano, TensorFlow, and Keras. I have summarized the following considerations for choosing among them. The right choice varies from person to person and from project to project: whether you work on image processing, natural language processing, or quantitative finance, your needs, and therefore your choice of platform, may differ.

Standard 1: Ease of integration with existing programming platforms and skills. Whether in academic research or engineering development, you have usually accumulated a lot of development experience and resources before taking up deep learning. You may already have a favorite programming language, your data may already be stored in a particular form, or your model may face particular requirements (such as latency). Standard 1 considers how easily a deep learning platform integrates with these existing resources. Here we answer the following questions: Do you need to learn a new language just for this platform? Can it be combined with the programming languages you already use?

Standard 2: Closeness of integration with the surrounding machine learning and data processing ecosystem. In the end, our work relies on many software packages for data processing, visualization, statistical inference, and so on.
Here we need to answer: Is there a convenient tool for preprocessing data before modeling? Most platforms, of course, ship their own preprocessing tools for images, text, and so on. After modeling, are there convenient tools for analyzing the results, such as visualization, statistical inference, and data analysis?

Standard 3: What else can you do with the platform beyond deep learning? Many of the platforms above were developed specifically for deep learning research and applications, and many include powerful optimizations for distributed computing, GPUs, and other architectures. Can you use them for other things? For example, some deep learning software can be used to solve quadratic optimization problems, and some deep learning platforms are easily extended to reinforcement learning applications. Which platforms have such characteristics? This question relates to one aspect of today's deep learning platforms: graph computation and automatic differentiation.

Standard 4: Requirements for data volume, hardware, and support. The amount of data in deep learning varies by application scenario, which brings up distributed computing and multi-GPU computing. For example, people working on computer vision often need to split image files and computing tasks across multiple machines for execution. Every deep learning platform is currently developing rapidly, and each platform's support for distributed computing and similar scenarios is evolving too, so some of what I say today may no longer apply in a few months.

Standard 5: Maturity of the deep learning platform. Maturity is a more subjective consideration. The factors I personally consider include: how active the community is; whether it is easy to communicate with the developers; and the platform's current momentum in applications.
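Standard 3 mentions that deep learning software can also solve quadratic optimization problems. What such a platform really contributes is the gradient; the optimization loop itself is ordinary gradient descent. Below is a minimal, framework-free sketch, assuming a hand-derived gradient (a deep learning platform would produce it by automatic differentiation instead). The matrix `A`, vector `b`, step size, and iteration count are illustrative values, not taken from the talk.

```python
# Minimal sketch: minimize f(x) = 0.5 * x^T A x - b^T x by gradient descent.
# A, b, the step size, and the step count are illustrative assumptions.

def mat_vec(A, x):
    """Return the matrix-vector product A @ x for a list-of-rows matrix."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def dot(u, v):
    """Return the inner product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))

def objective(A, b, x):
    """f(x) = 0.5 * x^T A x - b^T x."""
    return 0.5 * dot(x, mat_vec(A, x)) - dot(b, x)

def gradient(A, b, x):
    """Gradient of f for a symmetric A: A x - b (derived by hand here)."""
    return [ax - bi for ax, bi in zip(mat_vec(A, x), b)]

def gradient_descent(A, b, x0, lr=0.1, steps=500):
    """Take `steps` fixed-size steps against the gradient, starting from x0."""
    x = list(x0)
    for _ in range(steps):
        g = gradient(A, b, x)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x

A = [[3.0, 1.0],
     [1.0, 2.0]]   # symmetric positive definite, so f has a unique minimum
b = [1.0, 1.0]
x_min = gradient_descent(A, b, [0.0, 0.0])
print(x_min)                   # approximately [0.2, 0.4], the solution of A x = b
print(objective(A, b, x_min))  # approximately -0.3
```

Because `A` is positive definite, the minimizer is exactly the solution of `A x = b`, so the result can be checked by hand; the step size only needs to be small enough (here below 2 divided by the largest eigenvalue of `A`) for the iteration to converge.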
Having covered the five reference standards, we now use them to evaluate each deep learning platform.

Second, evaluating the deep learning platforms

Standard 1: Ease of integration with existing programming platforms and skills. As above, the questions are: Do you need to learn a new language just for this platform? Can it be combined with the programming languages you already use?

The key information is summarized in the table below, which lists the underlying language and the user-facing language of each deep learning platform. (Keras uses Theano or TensorFlow as its underlying backend.)

We can see a trend: the underlying language of deep learning platforms is mostly C++/C, which gives high runtime efficiency, while the user-facing language tends to be closer to practical use. We can roughly conclude that Python will be the main user language for deep learning in the future; Microsoft, for example, has added Python support in CNTK 2.0. Many platforms also let you configure the network and train the model through scripts. Overall, Python has established itself as the basic language for deep learning modeling. If your favorite programming language is Python, congratulations: most platforms will fit seamlessly with your skills. If you use Java, don't worry: many platforms also support Java, and Deeplearning4j is a native Java deep learning platform.

Standard 2: Closeness of integration with the surrounding machine learning and data processing ecosystem. Here we mention the main data processing tools in use today. The more comprehensive data analysis tools are R and its ecosystem and Python and its ecosystem; a smaller niche is Julia and its ecosystem.
Once deep learning modeling and related tasks are done, integration with this ecosystem matters a great deal. Keras and its ecosystem (TensorFlow, Theano), CNTK, MXNet, Caffe, and others integrate well with Python and R, which gives them a clear advantage here. Caffe also comes with a large number of image processing packages, which is a great advantage for inspecting data.

Standard 3: What else can you do with the platform beyond deep learning? The picture below is the core of this talk. Deep learning platforms differ in what their creators emphasized in the design. By function, we can divide a deep learning platform into the following six layers:

1. CPU + GPU control and communication: the lowest, foundational layer of deep learning.
2. Memory and variable management: definitions of individual intermediate variables, such as vectors and matrices, together with memory allocation.
3. Basic operations: basic arithmetic such as addition, subtraction, multiplication, and division, sine and cosine functions, and maximum and minimum.
4. Basic simple functions:
   - various activation functions, such as sigmoid and ReLU;
   - the differentiation module.
5. Basic neural network modules, including dense layers, convolution layers, LSTMs, and other common modules.
6. The top layer: integration and optimization of all the neural network modules.

Platforms differ in which of these layers they provide, and I divide them into four categories:

1. The first category is functional deep learning platforms, represented by Caffe, Torch, MXNet, and CNTK. These platforms provide a very complete set of basic modules that let developers quickly build deep neural network models and start training, which is enough to solve most of today's deep learning problems.
   However, these modules rarely expose the underlying computing functions directly to the user.
2. The second category is abstraction platforms, represented by Keras. Keras itself cannot drive the underlying operations; it relies on TensorFlow or Theano for them, while itself providing neural network module abstractions and optimization of the training process. Users get rapid modeling together with convenient secondary development, such as adding their own modules.
3. The third category is TensorFlow. TensorFlow draws on the strengths of existing platforms: it lets users reach the underlying data while also offering ready-made neural network modules for very fast modeling. TensorFlow is a very good platform that spans both levels.
4. The fourth category is Theano, one of the earliest deep learning platforms, which focuses on the underlying basic operations.

So you can choose a platform according to your needs:

- If the task goal is very clear and the work is short-term, a category 1 platform will suit you.
- If you need some low-level development but don't want to give up the convenience of ready-made modules, category 2 and 3 platforms will work for you.
- If you have a background in statistics, computational mathematics, or similar fields and want to use existing tools for computational development, categories 3 and 4 will suit you.

Graph computation and symbolic differentiation: deep learning's profound contribution to the open source community

You may ask: I can already train a deep learning model, which is good enough; why would I need to touch the underlying layers? Here I will introduce one by-product of deep learning, and one of its more important features: symbolic differentiation.
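To make the idea concrete, here is a toy sketch of what symbolic differentiation does: like Theano's `T.grad`, it takes an expression and returns a *new expression* for the derivative, which can then be printed or evaluated. The node classes (`Const`, `Var`, `Add`, `Mul`, `Pow`) and the helpers `grad`, `evaluate`, and `show` are hypothetical names for this illustration only; this is not how Theano is implemented, and it supports only constant exponents.

```python
from dataclasses import dataclass
from typing import Dict, Union

# Toy expression nodes (hypothetical names for this sketch, not Theano's classes).

@dataclass(frozen=True)
class Const:
    value: float

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Add:
    left: "Expr"
    right: "Expr"

@dataclass(frozen=True)
class Mul:
    left: "Expr"
    right: "Expr"

@dataclass(frozen=True)
class Pow:
    base: "Expr"
    exponent: float  # constant exponents only, to keep the rules short

Expr = Union[Const, Var, Add, Mul, Pow]

def grad(e: Expr, wrt: str) -> Expr:
    """Build a NEW expression for d(e)/d(wrt) using textbook rules."""
    if isinstance(e, Const):
        return Const(0.0)
    if isinstance(e, Var):
        return Const(1.0 if e.name == wrt else 0.0)
    if isinstance(e, Add):   # sum rule
        return Add(grad(e.left, wrt), grad(e.right, wrt))
    if isinstance(e, Mul):   # product rule
        return Add(Mul(grad(e.left, wrt), e.right),
                   Mul(e.left, grad(e.right, wrt)))
    if isinstance(e, Pow):   # power rule with chain rule: n * u**(n-1) * u'
        return Mul(Mul(Const(e.exponent), Pow(e.base, e.exponent - 1)),
                   grad(e.base, wrt))
    raise TypeError(f"unsupported node: {e!r}")

def evaluate(e: Expr, env: Dict[str, float]) -> float:
    """Evaluate an expression numerically, taking variable values from env."""
    if isinstance(e, Const):
        return e.value
    if isinstance(e, Var):
        return env[e.name]
    if isinstance(e, Add):
        return evaluate(e.left, env) + evaluate(e.right, env)
    if isinstance(e, Mul):
        return evaluate(e.left, env) * evaluate(e.right, env)
    if isinstance(e, Pow):
        return evaluate(e.base, env) ** e.exponent
    raise TypeError(f"unsupported node: {e!r}")

def show(e: Expr) -> str:
    """Render an expression as a fully parenthesized string."""
    if isinstance(e, Const):
        return repr(e.value)
    if isinstance(e, Var):
        return e.name
    if isinstance(e, Add):
        return f"({show(e.left)} + {show(e.right)})"
    if isinstance(e, Mul):
        return f"({show(e.left)} * {show(e.right)})"
    if isinstance(e, Pow):
        return f"{show(e.base)}**{e.exponent!r}"
    raise TypeError(f"unsupported node: {e!r}")

x = Var("x")
y = Pow(x, 2.0)                  # y = x ** 2
gy = grad(y, "x")                # symbolic dy/dx, still an expression
print(show(gy))                  # ((2.0 * x**1.0) * 1.0)
print(evaluate(gy, {"x": 4.0}))  # 8.0
```

Unlike a production system, this toy neither simplifies `(2.0 * x**1.0) * 1.0` to `2x` nor compiles anything; it simply walks the tree. The key point carries over, though: the derivative is itself a graph, so it can be optimized, compiled, or differentiated again.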
The English term is symbolic differentiation, and there is plenty of literature and tutorials on it. What does it mean? In the past, when machine learning research needed a derivative, we usually had to derive the derivative of the objective function by hand. Recently, some deep learning tools such as Theano have introduced automated symbolic differentiation, which greatly reduces the developer's workload. Commercial software such as MATLAB and Mathematica has offered symbolic computation for many years, but because of the limitations of commercial software, symbolic computation was never widely adopted in machine learning applications. Deep networks are so complex that their objective functions are too complicated to differentiate by hand, which makes symbolic differentiation necessary. Other, non-deep-learning problems, such as quadratic optimization, can also be solved with these deep learning tools. Better still, Theano can compile the result of symbolic differentiation into C code, so it runs efficiently as low-level code.

Here is a Theano example:

```python
>>> import numpy
>>> import theano
>>> import theano.tensor as T
>>> from theano import pp
>>> x = T.dscalar('x')            # a symbolic double-precision scalar
>>> y = x ** 2                    # symbolic expression y = x^2
>>> gy = T.grad(y, x)             # symbolic derivative dy/dx
>>> f = theano.function([x], gy)  # compile the derivative graph into a callable
>>> float(f(4))
8.0
```

Using symbolic differentiation, we easily obtain the derivative of y with respect to x at x = 4: since dy/dx = 2x, the value is 8.

Standard 4: Requirements for data volume, hardware, and support. Every platform mentioned above claims to support multiple GPUs and multiple servers, and plenty of published evaluations argue that one platform or another performs better. I leave the specific choice to you and offer only the following information. First, think about what you want to do.
Among deep learning applications, the main scenario that requires training models across multiple servers is often image processing. Natural language processing work can usually be done on a single well-configured server. If the data volume is large, it can often be preprocessed with tools such as Hadoop and reduced to a size a single machine can handle.

I am a fairly traditional person and have been compiling all kinds of scientific computing software since I was young. The mainstream literature now reports that a single-machine GPU can be tens of times faster than a CPU. In practice, though, this deserves some caution: on Linux, linking NumPy against Intel MKL as its linear algebra library can give a similar speedup. Many evaluations today also produce different results on different hardware and network configurations; even tests on Amazon's cloud platform can give completely different results depending on the network environment, configuration, and so on. So my advice, based on experience, is to take all such evaluations with a grain of salt and keep your own judgment. The main platforms mentioned earlier support multi-GPU and multi-node training to different degrees, and that support is developing rapidly. I recommend that listeners decide for themselves based on their own needs.

Standard 5: Maturity of the deep learning platform. Judging maturity is often subjective, and the conclusions are mostly controversial. I will only list data here and leave the judgment to you. We measure each platform's activity level by several popular numbers on GitHub, collected on the afternoon of 2016-11-25. The top three platforms for each factor are highlighted in bold.

The first factor is the number of contributors.
Contributors are defined very broadly here: people who raised questions in GitHub issues are also counted, but the number still serves as a measure of a platform's popularity. Keras, Theano, and TensorFlow, the three deep learning platforms with Python as their native language, have the most contributors.

The second factor is the number of pull requests, which measures how actively a platform is being developed. Caffe has the most pull requests, possibly because of its unique advantage in the image domain, and Keras and Theano again make the list.

In addition, these platforms each have their favored application scenarios:

- For natural language processing, CNTK is the first recommendation. Microsoft Research (MSR and MSRA) has contributed a great deal to natural language processing over many years, and many CNTK developers are also experts in distributed computing who use quite distinctive methods.
- For very broad applications and for learning, the Keras/TensorFlow/Theano ecosystem may be your best choice.
- For computer vision, Caffe may be your best choice.

On the future of deep learning platforms: Microsoft is very committed to CNTK, its Python API has been added and is in good shape, and it deserves more attention. Some people think deep learning models are strategic assets and that domestic software should be used to prevent monopoly. I don't think this is a real problem. First, software such as TensorFlow is open source and its code can be reviewed. In addition, trained models can be saved in HDF5 format and shared across platforms, so the probability of a Google monopoly is very small.

It is quite likely that in the future, people will train some very powerful convolution layers that solve virtually all computer vision problems very well. We would then only need to call these convolution layers, with no large-scale convolution training required.
These convolution layers may also be implemented in hardware as a small module in our phone chips, so that the convolution is already computed by the time a photo is taken.
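"Calling" a ready-made convolution layer amounts to running its forward pass with fixed weights, with no training step at all. Below is a minimal pure-Python sketch, assuming a hand-written 3x3 vertical-edge kernel as a stand-in for learned weights; the function name `conv2d_valid`, the kernel, and the tiny image are illustrative, not taken from any framework.

```python
# "Calling" a fixed convolution layer = a forward pass with frozen weights.
# EDGE_KERNEL is a hand-written stand-in for pre-trained weights (an assumption).

def conv2d_valid(image, kernel):
    """'Valid' 2D convolution as most deep learning frameworks define it:
    slide the kernel over the image without flipping it (cross-correlation)
    and without padding, so the output shrinks by kernel size - 1."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for i in range(ih - kh + 1):
        row = []
        for j in range(iw - kw + 1):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

# Responds to dark-to-bright vertical edges (left to right).
EDGE_KERNEL = [
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
]

# A tiny 4x5 grayscale "photo": dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

features = conv2d_valid(image, EDGE_KERNEL)
print(features)  # [[0.0, 3.0, 3.0], [0.0, 3.0, 3.0]]
```

The nonzero responses mark where the dark-to-bright edge falls inside the 3x3 window. A real pre-trained layer would have many learned kernels plus a bias and activation, but the per-photo work is the same fixed multiply-and-add pattern, which is what makes it a natural candidate for a dedicated hardware module.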
