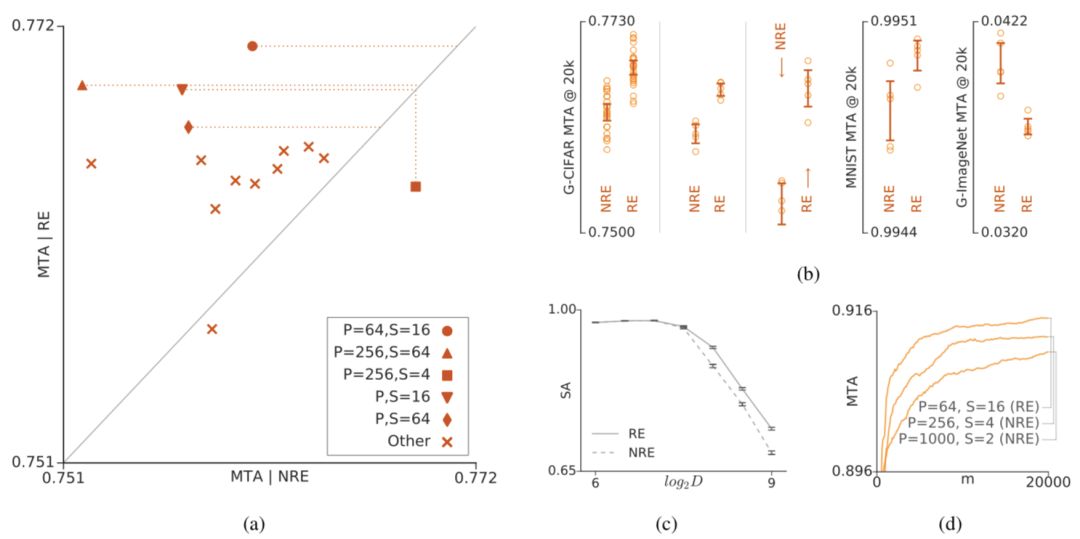

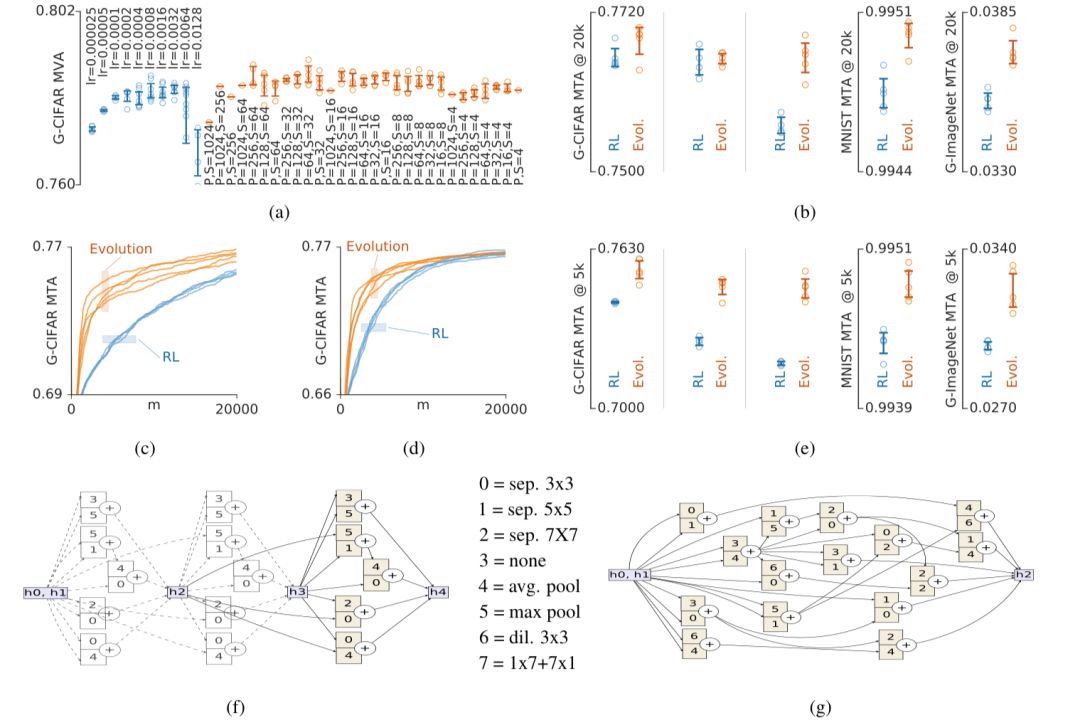

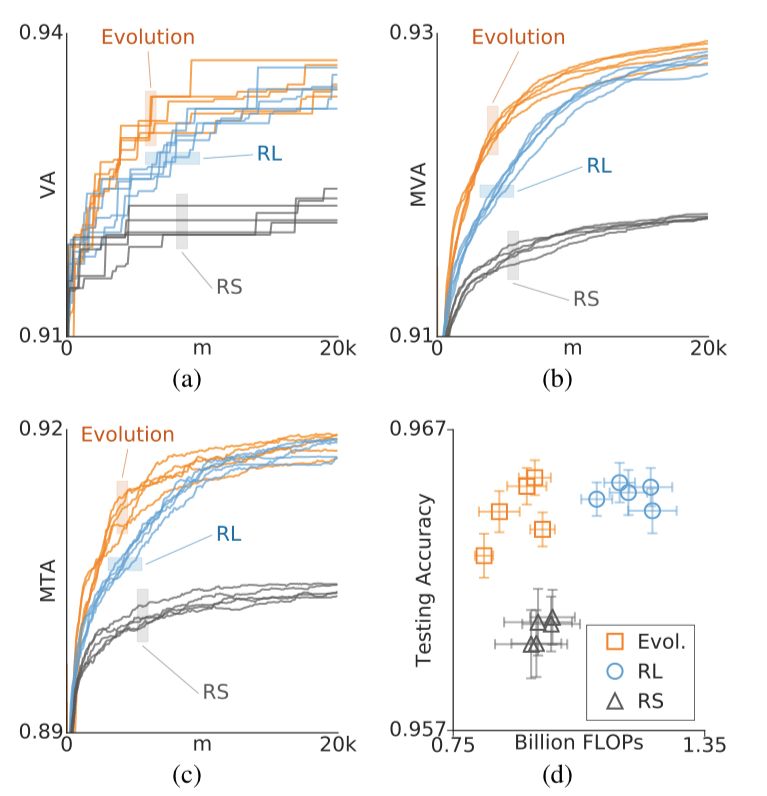

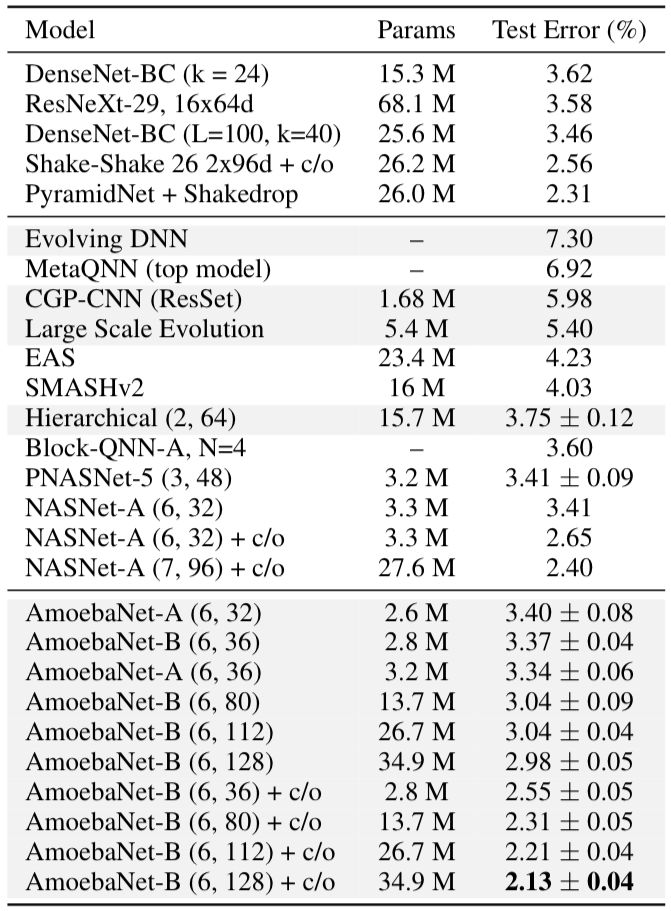

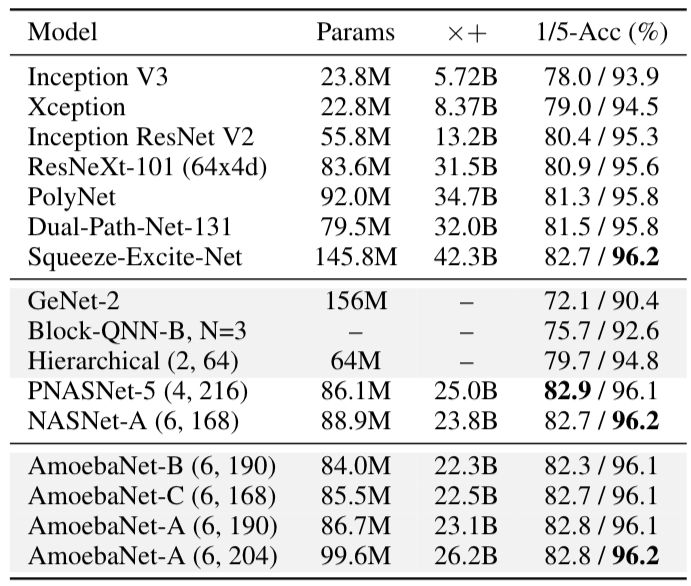

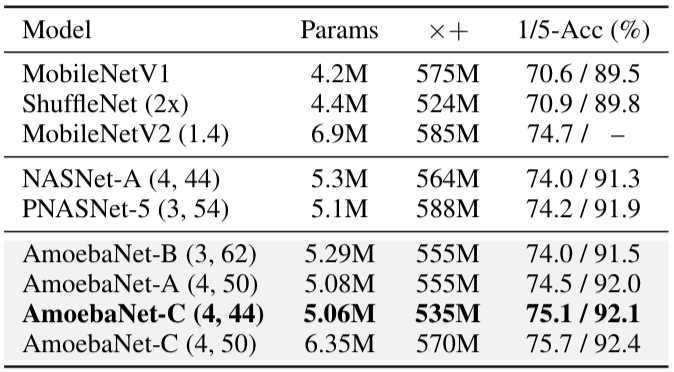

The success of neural networks has pushed models toward increasingly complex architectures and has given rise to architecture search, in which a neural network's architecture is learned automatically. The traditional approach to architecture search is neuro-evolution. Advances in hardware now make large-scale evolution possible, producing image classification models comparable to hand-designed ones. However, although these techniques work, they do not tell developers which method to use in a specific setting (i.e., a given search space and dataset). In this paper, the researchers use a regularized version of a popular asynchronous evolutionary algorithm and compare it with its non-regularized form and with a reinforcement learning method. With identical hardware, compute, and neural network training code, they examine how the methods perform across different datasets, search spaces, and scales. The following is a summary of the paper.

Experimental method

We use different algorithms to search the space of neural network classifiers. Following the baseline study, the best models found are then scaled up to produce higher-quality image classifiers. We run the search at different compute scales. In addition, we study the evolutionary algorithms in simulations that do not involve neural networks.

1. Search space

All neuro-evolution and reinforcement learning experiments use the search space design of the baseline study, which searches for the architectures of two Inception-like modules. These modules are stacked in a feed-forward pattern to form an image classifier.

2. Architecture search algorithm

For the evolutionary algorithms, we use tournament selection or its regularized variant. The standard tournament selection algorithm iteratively improves a population of P trained models. In each cycle, a sample of S models is selected at random from the population.
The best model in the sample produces a child model with a mutated architecture, which is trained and then added to the population. The worst model in the sample is removed. We call this method non-regularized evolution (NRE). Its variant, regularized evolution (RE), is a natural modification: instead of removing the worst model in the sample, it removes the oldest model in the sample (the one that was trained first). In both NRE and RE, the population is initialized with random architectures.

3. Experimental setup

To compare the evolutionary algorithms with reinforcement learning, we run experiments at different compute scales.

Small-scale experiments

These can be run on CPUs. We use the SP-I, SP-II, and SP-III search spaces and experiment on the G-CIFAR, MNIST, or G-ImageNet datasets.

Large-scale experiments

These reproduce the setup of the baseline study. Only the SP-I search space and the CIFAR-10 dataset are used, and each of the two methods is run on 450 GPUs for nearly 7 days.

4. Model scaling

We want to turn the architectures discovered by evolution or reinforcement learning into full-size, accurate models. The scaled-up models are trained on CIFAR-10 or ImageNet using the same procedure as the baseline study.

Experimental results

Comparison between regularized and non-regularized evolution. Panel (a) compares small-scale results on the G-CIFAR dataset for non-regularized and regularized evolution under different meta-parameters, where P is the population size and S is the sample size. Panel (b) shows the performance of NRE and RE in five different settings, from left to right: G-CIFAR/SP-I, G-CIFAR/SP-II, G-CIFAR/SP-III, MNIST/SP-I, and G-ImageNet/SP-I. Panel (c) shows the simulation results: the vertical axis is the simulated accuracy and the horizontal axis is the dimension of the problem. Panel (d) shows three large-scale experiments on CIFAR-10.
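
The two selection schemes compared above differ only in which model leaves the population each cycle. Below is a minimal Python sketch of both, assuming a toy problem in place of real training: the hypothetical `toy_train` scores a bit-string "architecture" by its fraction of 1-bits plus Gaussian noise, and the hypothetical `mutate` flips one bit. Neither is the paper's search space, mutation operator, or simulation; the removal rule simply follows the description above (worst in the sample for NRE, oldest in the sample for RE).

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Model:
    arch: List[int]   # hypothetical genotype: a bit string standing in for an architecture
    accuracy: float   # noisy score standing in for validation accuracy
    birth: int        # cycle in which the model was trained (smaller = older)


def toy_train(arch, rng, noise=0.05):
    # Hypothetical stand-in for training: true quality is the fraction of 1-bits,
    # and the observed score adds Gaussian noise, like a noisy training run.
    return sum(arch) / len(arch) + rng.gauss(0.0, noise)


def mutate(arch, rng):
    # Hypothetical mutation: copy the parent and flip one randomly chosen bit.
    child = list(arch)
    i = rng.randrange(len(child))
    child[i] = 1 - child[i]
    return child


def evolve(P, S, cycles, dim, regularized, seed=0):
    """Tournament selection; regularized=True gives RE, False gives NRE."""
    if not 2 <= S <= P:
        raise ValueError("need 2 <= S <= P")
    rng = random.Random(seed)
    # Both variants start from P random architectures.
    population = []
    for t in range(P):
        arch = [rng.randint(0, 1) for _ in range(dim)]
        population.append(Model(arch, toy_train(arch, rng), t))
    history = list(population)

    for t in range(P, P + cycles):
        sample = rng.sample(population, S)              # S models drawn at random
        parent = max(sample, key=lambda m: m.accuracy)  # best model in the sample
        child_arch = mutate(parent.arch, rng)
        child = Model(child_arch, toy_train(child_arch, rng), t)
        if regularized:
            victim = min(sample, key=lambda m: m.birth)     # RE: oldest in the sample
        else:
            victim = min(sample, key=lambda m: m.accuracy)  # NRE: worst in the sample
        population.remove(victim)  # birth stamps are unique, so this removes exactly the victim
        population.append(child)
        history.append(child)
    return population, history
```

For example, `evolve(P=20, S=5, cycles=500, dim=32, regularized=True)` returns the final population and the full history of trained models, so the two variants can be compared on the same toy problem. One consequence of the removal rule is visible in the code: under NRE, the model with the highest (possibly noise-inflated) score can never be the worst in a sample of size S ≥ 2, so it is never removed, whereas under RE every model is eventually aged out.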

Next, we ran small-scale experiments comparing reinforcement learning and the evolutionary algorithms under different conditions, with the following results. Panel (a) summarizes the meta-parameter tuning on G-CIFAR; the vertical axis is the mean validation accuracy of the top 100 models in each experiment. The results show that none of the methods is very sensitive to its meta-parameters. Panel (b) compares the methods in the same five settings: G-CIFAR/SP-I, G-CIFAR/SP-II, G-CIFAR/SP-III, MNIST/SP-I, and G-ImageNet/SP-I. Panels (c) and (d) show detailed results on G-CIFAR/SP-II and G-CIFAR/SP-III, respectively; the horizontal axis is the number of models trained. Panel (e) addresses the case where limited resources may force an experiment to stop early: it shows that early in the search, the accuracy of the evolutionary algorithm rises much faster than that of reinforcement learning. Panels (f) and (g) show the top architectures found in SP-I and SP-III, respectively.

After the small-scale comparison, we ran large-scale experiments. In the results, yellow denotes evolution and blue denotes reinforcement learning. In every panel except (d), the horizontal axis is the number of models (m). Panels (a), (b), and (c) show the three algorithms, each run five times in the same experiment. The evolution and reinforcement learning experiments use their best meta-parameters.

After the evolutionary experiments, we selected the best model and named it AmoebaNet-A. Adjusting N and F lowers the test error rate, as shown in Table 1.

Table 1

The baseline study obtained NASNet-A under the same experimental conditions. Table 2 shows that on CIFAR-10, AmoebaNet-A achieves a lower error rate than NASNet-A at a matched parameter count, and uses fewer parameters at a matched error rate. Its performance on ImageNet is also the best reported at the time of writing.
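
N and F are not defined in this summary. In the NASNet-style space of the baseline study, N usually denotes how many normal cells are repeated in each stack and F the number of filters in the first stack. Assuming that convention, plus three stacks separated by reduction cells that double the filter count (also an assumption here), the hypothetical helper `cell_plan` below lists the resulting cell sequence, and the hypothetical `param_proxy` gives a crude size estimate by summing squared filter counts, since a convolution's weight count grows with input channels times output channels.

```python
from typing import List, Tuple


def cell_plan(N: int, F: int, num_stacks: int = 3) -> List[Tuple[str, int]]:
    """Hypothetical NASNet-style layout: `num_stacks` stacks of N normal cells,
    with a reduction cell before every stack except the first."""
    if N < 1 or F < 1 or num_stacks < 1:
        raise ValueError("N, F and num_stacks must be positive")
    plan = []
    filters = F
    for stack in range(num_stacks):
        if stack > 0:
            filters *= 2  # assumption: each reduction cell doubles the filter count
            plan.append(("reduction", filters))
        plan.extend(("normal", filters) for _ in range(N))
    return plan


def param_proxy(plan: List[Tuple[str, int]]) -> int:
    # Crude size estimate: a conv's weights scale with in_channels * out_channels,
    # so each cell contributes roughly filters**2 (kernel sizes and cell internals ignored).
    return sum(filters * filters for _, filters in plan)
```

For example, `cell_plan(2, 32)` yields two normal cells at 32 filters, a reduction cell and two normal cells at 64, then a reduction cell and two normal cells at 128. Under this proxy, doubling F roughly quadruples the model size, while increasing N grows it only linearly, which is why the two knobs trade accuracy against parameter count differently.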

Table 2

Finally, we compared our models with hand-designed architectures and with other architectures; our models achieve higher accuracy than both.

Table 3

Conclusion

The progress curves of the large-scale experiments show that both reinforcement learning and evolution approach a common accuracy asymptote, so the question to focus on is which algorithm gets there faster. The figure shows that reinforcement learning takes about twice as long to reach its highest accuracy; in other words, evolution is roughly twice as fast as reinforcement learning. We have not, however, quantified this effect further. In addition, the effect of search-space size needs further evaluation. Large spaces require less expert knowledge to design, while smaller spaces can produce better results faster. Therefore, it is difficult to tell which search algorithm is better in a small space. This research is only a first empirical study of how evolutionary algorithms and reinforcement learning compare in specific settings. We hope future work will generalize these findings and explain the respective advantages of the two methods.
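
The "twice as fast" comparison can be made concrete by counting how many models each method must train before its best accuracy so far first reaches a chosen threshold. A minimal sketch, assuming each run is recorded as a list of per-model validation accuracies in training order; the function names are hypothetical, and the model count stands in for wall-clock time.

```python
from itertools import accumulate
from typing import List, Optional, Sequence


def best_so_far(accuracies: Sequence[float]) -> List[float]:
    # Running maximum: the best accuracy seen after each trained model.
    return list(accumulate(accuracies, max))


def models_to_reach(accuracies: Sequence[float], threshold: float) -> Optional[int]:
    # How many models had to be trained before the best-so-far accuracy hit the threshold.
    for count, best in enumerate(best_so_far(accuracies), start=1):
        if best >= threshold:
            return count
    return None  # the run never reached the threshold


def speedup(fast: Sequence[float], slow: Sequence[float], threshold: float) -> Optional[float]:
    # Ratio > 1 means `fast` reached the threshold with fewer trained models than `slow`.
    a = models_to_reach(fast, threshold)
    b = models_to_reach(slow, threshold)
    if a is None or b is None:
        return None
    return b / a
```

A ratio of about 2 from `speedup(evolution_run, rl_run, threshold)` would correspond to evolution reaching the threshold with half as many trained models. Because both methods approach the same asymptote, the threshold is best placed just below it; a threshold neither run reaches returns `None` rather than a misleading ratio.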